Welcome to this Full-Stack presentation!

Feel free to browse through projects via the blue links provided below. Enjoy this digital experience of problem solving and critical thinking.

Let's get started!

Part 1:

Go to Project 1 - Data Engineering

Go to Project 2 - Data Science

Go to Project 3 - Analytics and Visual Ouput

Part 2:

Go to Project 4 - Cloud Computing

Go to Project 5 - Unit Testing

Go to Project 6 - CyberSecurity

Go to Project 7 - GDPR Offuscator Draft

Go to Project 8 - Refactoring Machine Learning processes

Deployment and presentation

The projects presented here are all interconnected. The task here is to build an automated data management system that will feed a Deep Learning Neural Network for predictions. Further illustrations will be presented along to provide further references.

You can visit the Git repository for this portfolio website via the following link: Visit the Git repository

Project 1 - Data Engineering: Building an ETL ingestion Pipeline

Description

In this section an automated ETL (Extract / Transform / Load) pipeline is being setup to handle data from a remote database. The pipeline output is an ingestion data lake connected a local data warehouse where processed data are stored in a STAR schema (relational databases) for further processing in the Data Science project that will follow.

Relational databases can created with PostgreSQL which can be intalled with the following command on Linux:

pip install psql

A virtual environment is then created for testing purposes to ensure that the software is robust and always working. For testing the library Pytest is being used while a MakeFile ensures automation during deployment.

A virtual environment can be created with the following commands:

pip install pyenv

python -m venv venv

Loading the virtual environment:

source venv/bin/activate

Exporting the python path to the environment:

export PYTHONPATH=$PWD

Pytest can be installed in the virtual environment with the following command:

pip install pytest

A requirement file is ideal in this case to store all the list of packages required for the project and to be installed all at once:

pip freeze > requirements.txt

pip install -r requirements.txt

Autopep8 is added to the list for PEP-8 compliance.

Safety and Bandit check for any issues such as SQL injections.

Coverage is a package that checks how much of the code is being tested.

The entire automation procedure is then deployed remotely, essentially by running a Makefile in Bash script.

The dataset

The example dataset is accessed via the following link:

\link>

\img>

Those data are static for now but ideally the management system should eventually be able to handle new sets of data dynamically using a cloud computing platform such as Amazon Web Services (AWS) and Terraform. You can view a version of a pipeline previously built with AWS and AWS Lambda

The data consist of a training set and a testing set each detailling screen dots with x and y coordinates from handwritten data from a touch-screen device. Validation data are also included in this case which means it is possible to assess whether the model's predictions were true or not.

MVP: Most Valuable Product

The bot must accurately ingest the data and carry timestamp processing tasks, then store the procesed data in a data warehouse for the purpose of the Data Science project, all in order to translate the hand written letters.

The Code: Python 3 and Pandas libraries

Pandas libraries provide robust packages that enable processors to handle pkl formats and Json protocols efficiently. The code for ingestion is pretty straightforward and looks as below:

#import Pandas libraries

import pandas as pd

# Loading the training dataset

# An util folder may ideally be created later for reused functions

def load_data():

f = gzip.open('mnist.pkl.gz', 'rb')

f.seek(0)

training_data, validation_data, test_data = pickle.load(f, encoding='latin1')

f.close()

return (training_data, validation_data, test_data)

training_data, validation_data, test_data = load_data()

training_data

# Printing datasets details

print("The feature dataset is:" + str(training_data[0]))

print("The target dataset is:" + str(training_data[1]))

print("The number of examples in the training dataset is:" + str(len(training_data[0])))

print("The number of points in a single input is:" + str(len(training_data[0][1])))

The Data Warehouse

The data warehouse is designed in three stages:

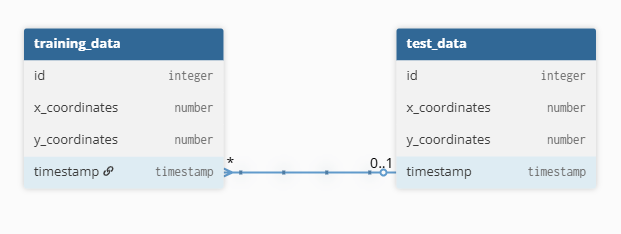

- Concept: Storing loaded training and test datasets in a relational database in a STAR schema. The data warehouse will contain two tables: the training table and the test table.

- Logic: Primary keys and timestamps are assigned to data points from the data sets to allow dynanamic data tracking and time series analysis.

- Physic: PostgreSQL will be used to create the database and relational tables via the python script. The tables will be linked by the timestamps attribute in the Entity Relationship Diagram.

The local database and tables can be created using the Psycopg2 library. A dedicated warehouse_handler.py facilitate debugging while carrying this task.

Let's create our data warehouse with psql using Psycopg2:

import psycopg2

from psycopg2 import sql

try:

# Connecting to the default 'postgres' database

connection = psycopg2.connect(

dbname="postgres",

user="hgrv",

password="hgrv_pass",

host="localhost",

port="5432"

)

connection.autocommit = True # Enable autocommit for database creation

# Creating a cursor object for SQL script

cursor = connection.cursor()

# Checking whether the database already exists

cursor.execute(

sql.SQL("SELECT 1 FROM pg_database WHERE datname = %s"),

[data_warehouse]

)

exists = cursor.fetchone()

# Creating the table for the first instance

if not exists:

# Defining a name for the database for the first instance

database_name = "data_warehouse"

# Executing a SQL command to create the database if it does not already exist (first instance only)

cursor.execute(sql.SQL("CREATE DATABASE {}").format(sql.Identifier(database_name)))

print(f"Database '{data_warehouse}' created successfully!")

else:

print(f"Database '{data_warehouse}' already exists.")

except Exception as e:

print(f"Error: {e}")

print(f"Check the debugging console for any error in the SQL script.")

Note: The same result is achievable with Pg8000 (ideal for low-load applications without C-denpendencies). You can check the specific differences between Pg8000 and psycopg2 on the following link: https://www.geeksforgeeks.org/python/difference-between-psycopg2-and-pg8000-in-python/

Let's add the tables that will store the following corresponding attributes: 'id' (primary key), 'x-coordinates' and 'y-coordinates'. The connection and cursor

try:

# SQL query to create a table

create_table_query = """

CREATE TABLE IF NOT EXISTS test_data (

id SERIAL PRIMARY KEY,

x_coordinates NUMERIC(10, 2),

y_coordinates NUMERIC(10, 2),

timestamp DATE DEFAULT CURRENT_DATE

);

CREATE TABLE IF NOT EXISTS training_data (

id SERIAL PRIMARY KEY,

x_coordinates NUMERIC(10, 2),

y_coordinates NUMERIC(10, 2),

timestamp DATE

);

"""

# Executing the query

cursor.execute(create_table_query)

connection.commit() # Save changes to the database

print("Table 'employees' created successfully!")

except Exception as error:

print(f"Error occurred: {error}")

print(f"Check for any error in the SQL script.")

At this point, the data warehouse can be updated with the current load of data sets:

try:

# Data to insert

# training_data_to_insert = (train_set_x, train_set_y, timestamp_current)

# test_data_to_insert = (test_set_x, test_set_y, timestamp_current)

# Inserting x-coordinates, and timestamp into table 'training_data'

for item in train_set_x:

# Data to insert

training_data_to_insert = item

# SQL query to insert data into tables

insert_query = """

INSERT INTO training_data (x_coordinates, timestamp)

VALUES (train_set_x, timestamp_current)

"""

# Execute the query

cursor.execute(insert_query, training_data_to_insert)

# Commit the SQL script

connection.commit()

# Inserting y-coordinates into table 'training_data'

for item in train_set_y:

# Data to insert

training_data_to_insert = item

# SQL query to insert data into tables

insert_query = """

INSERT INTO training_data (y_coordinates)

VALUES (item, timestamp_current)

"""

# Execute the query

cursor.execute(insert_query, training_data_to_insert)

# Commit the SQL script

connection.commit()

# Inserting x-coordinates, and timestamp into table 'test_data'

for item in test_set_x:

# Data to insert

test_data_to_insert = item

# SQL query to insert data into tables

insert_query = """

INSERT INTO test_data (x_coordinates, timestamp)

VALUES (item, timestamp_current)

"""

# Execute the query

cursor.execute(insert_query, test_data_to_insert)

# Commit the SQL script

connection.commit()

# Inserting y-coordinates into table 'test_data'

for item in train_set_y:

# Data to insert

test_data_to_insert = item

# SQL query to insert data into tables

insert_query = """

INSERT INTO test_data (y_coordinates)

VALUES (item, timestamp_current)

"""

# Execute the query

cursor.execute(insert_query, test_data_to_insert)

# Commit the SQL script

connection.commit()

print("Data inserted successfully into {data_warehouse}!")

except Exception as error:

print(f"Error occurred: {error}")

print(f"Check for any error in the SQL script.")

finally:

# Closing the cursor and connection

cursor.close()

connection.close()

print("PostgreSQL connection is now closed.")

These 'try-except-finally' clauses are then put together in a function update_data_base() invoqued in the neural_layers.py file. We must also ensure that there are no duplicates due to the random pixel effect of handwriting on a touchscreen or touchpad.

In later projects when setting up the enpoint of an API, those SQL commands must always ensure that no injection occurs during queries.

Setting up an API (Application Programming Interface)

To make the designed platform more interactive, engaging and intersting, an API can be built to request the website user to suggest optimisation parameters before running the projects scripts, for the purpose of the following Data Science project:

import requests

def get_suggestion(number):

url = "http://numbersapi.com/{}".format(number)

r = requests.get(url)

if r.status_code == 200:

print(r.text)

else:

print("An error occurred, code={}".format(r.status_code))

The code can be refactored later in the project in order to intercept errors with user friendly messages and hints for conflicts resolution. This API can then be ran in the corresponding app or module as follows:

import api

api.get_suggestion(input("Enter a number to suggest layers density (Ex: 35): "))

APIs essentially act as simplified communication bridges between various software components and devices.

Automation and Orchestration

The orchestration of dependencies is then updated in the Makefile to reflect requirements changes and refresh the virtual environment for processing purposes, deep learning and automation:

# Define the variables for the Python interpreter

VENV = venv

PYTHON = $(VENV)/bin/python3

PIP = $(VENV)/bin/pip

# Declare phony target with no prerequisites

.PHONY: run clean test pythonpath

# Creating first rule to run the app in the virtual environment:

run: venv/bin/activate

$(PYTHON) src/app.py

# Run the neural network in the virtual environment with the app as prerequisite

run_neural_network: venv/bin/activate, run

$(PYTHON) src/neural_layers.py

# Creating virtual environment with updated dependencies

venv/bin/activate: requirements.txt

python3 -m venv venv

$(PIP) install -r requirements.txt

# Setting the python path to the current working directory

pythonpath: venv/bin/activate

export PYTHONPATH=$PWD

# Run tests

test: venv/bin/activate

$(PYTHON) -m unittest discover -s tests

# Clean up .pyc files and refresh

clean:

find . -name "*.pyc" -delete

rm -rf __pycache__

rm -rf venv

Once all the handling modules have been set, a backend neural network for deep learning can be added at the end of the pipeline to generate relevant visual outputs and predictive metrics.